Embracing AI to Save Lives

Do assertions like “The robots are coming” and “AI will replace humans” sound familiar? The possibilities have been debated extensively. Here at Crisis Text Line, we embrace AI to help humans serve humans better! How’s that for a novel thought?

The problem at hand. Crisis Text Line sees the largest influx of texters at imminent risk of harming themselves between midnight and 4am. During this interval, it’s especially crucial to identify and engage texters at imminent risk in the shortest possible time. We define imminent risk as having suicidal desire, a specific plan, access to the means for that plan, and an imminent timeframe for suicide. These texters are in immediate danger, and we must act fast.

Definitions

The Platform – The secure system through which Crisis Counselors engage with texters.

The Queue – The queue is monitored 24/7 by Crisis Counselors. As a new texter sends their first few messages describing their crisis, they get added to the queue and wait to be connected with a Crisis Counselor.

Imminent risk texters – Any texter expressing suicidal ideation is assessed for the level of risk. A texter who is ideating suicide, has a specific plan, access to the means for suicide, and a timeframe for their plan of 24 hours or less is said to be at imminent risk. Crisis Counselors work with them to de-escalate the situation or, in situations where the texter is unwilling or unable to plan for their own safety, contact emergency services.

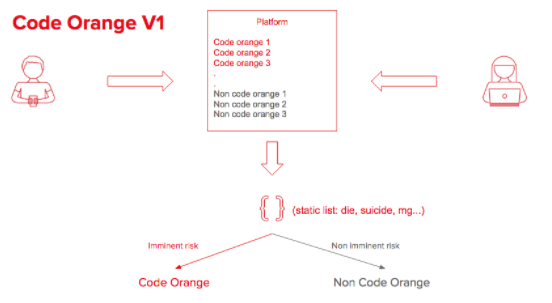

Starting with human instinct. When Crisis Text Line launched in 2013, the initial queueing solution started from a simple question: What words are likely to occur in a texter’s first few messages that would indicate high risk? We curated a list of 50 words based primarily on instinct, including “die,” “cut,” and “suicide,” and built a text parser around it. The parser would search a conversation’s opening messages for these keywords; if any occurred, the conversation would automatically be flagged as imminent risk and rise to the top of the queue, highlighted in orange. We named this solution “Code Orange V1.”

Human instinct can only go so far. While Code Orange V1 did reasonably well in identifying imminent risk texters, it had some limitations:

False alarms. A message like “I’m not suicidal, just depressed” would still be labelled as imminent risk because it contains the word “suicidal.”

Missed imminent risk. A message like “I am walking towards the bridge” would not be captured as imminent risk, because it contains none of the listed keywords.
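To make the keyword approach and its two failure modes concrete, here is a minimal Python sketch. It is not the production parser: the function name `flag_code_orange` and the four-word `KEYWORDS` set are assumptions for illustration (only “die,” “cut,” and “suicide” are named above, and “suicidal” is included because the false-alarm example depends on it).

```python
import re

# Hypothetical subset of the curated keyword list (the real list had 50 words).
KEYWORDS = {"die", "cut", "suicide", "suicidal"}

def flag_code_orange(opening_messages):
    """Return True if any keyword appears as a whole word in the opening messages."""
    for message in opening_messages:
        tokens = re.findall(r"[a-z']+", message.lower())
        if any(token in KEYWORDS for token in tokens):
            return True
    return False

# False alarm: the keyword is present, but the texter is negating it.
false_alarm = flag_code_orange(["I'm not suicidal, just depressed"])

# Missed imminent risk: no keyword is present, so nothing is flagged.
missed = flag_code_orange(["I am walking towards the bridge"])

print(false_alarm, missed)
```

A pure keyword match has no notion of negation or context, which is why the first message is flagged and the second is not.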

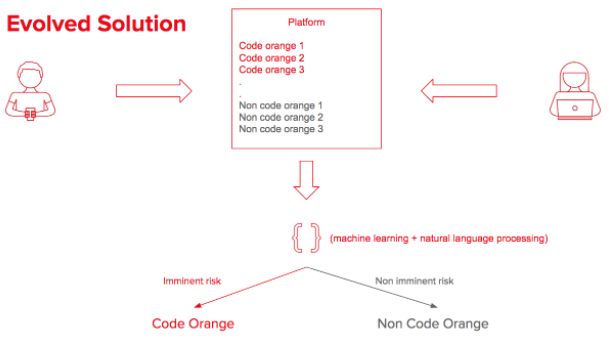

Moving from humans to machines. Seeing these limitations, we knew we needed a more nuanced solution, so we turned to machine learning and natural language processing. Our search began by experimenting with standard classification methods like naive Bayes, random forests, and support vector machines. However, we realized that our corpus is unique, as it’s built on texters’ relatively informal language. Most of the available open-source and off-the-shelf solutions are based on formal language, so we needed to create a custom solution.

The core classification capabilities of the resulting solution are based on a pointwise mutual information (PMI) method, powered by n-gram features (words and phrases). In simple terms, given a feature and two known outcomes (Code Orange and Non-Code Orange), PMI suggests the outcome with which the feature is most closely associated. The model was trained by extracting features from the first few messages sent by texters whom Crisis Counselors ultimately classified as high-risk in a post-conversation survey.
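For readers who want to see the idea in code, here is a rough Python sketch of PMI scoring over unigrams and bigrams, where PMI(f, c) = log2(P(f, c) / (P(f) P(c))), which equals log2(P(c | f) / P(c)). Everything specific in it is an assumption for illustration and not our production model: the toy training messages, the add-one smoothing, the label names, and the rule of summing PMI differences across the features in a message.

```python
import math
from collections import Counter

LABELS = ("code_orange", "non_code_orange")

# Hypothetical toy training data, invented for this sketch.
TOY_DATA = [
    ("i want to take my life", "code_orange"),
    ("i feel like driving off the bridge", "code_orange"),
    ("i took a whole bottle of pills", "code_orange"),
    ("my friend died by suicide and i need to talk", "non_code_orange"),
    ("i lost a friend to suicide two months ago", "non_code_orange"),
    ("i am stressed about school", "non_code_orange"),
]

def ngrams(text, max_n=2):
    """Return the set of word n-grams (n = 1..max_n) in a message."""
    words = text.lower().split()
    return {" ".join(words[i:i + n])
            for n in range(1, max_n + 1)
            for i in range(len(words) - n + 1)}

def train(labeled_messages):
    """Count, per message, how often each feature occurs with each label."""
    label_counts, feature_counts, joint_counts = Counter(), Counter(), Counter()
    for text, label in labeled_messages:
        label_counts[label] += 1
        for feature in ngrams(text):
            feature_counts[feature] += 1
            joint_counts[feature, label] += 1
    return label_counts, feature_counts, joint_counts

def pmi(feature, label, model, k=1.0):
    """PMI(f, c) = log2(P(c | f) / P(c)), with add-k smoothing on P(c | f)."""
    label_counts, feature_counts, joint_counts = model
    p_label = label_counts[label] / sum(label_counts.values())
    p_label_given_feature = ((joint_counts[feature, label] + k)
                             / (feature_counts[feature] + k * len(LABELS)))
    return math.log2(p_label_given_feature / p_label)

def score(text, model):
    """Sum PMI differences over known features; positive leans Code Orange."""
    _, feature_counts, _ = model
    return sum(pmi(f, "code_orange", model) - pmi(f, "non_code_orange", model)
               for f in ngrams(text) if f in feature_counts)

model = train(TOY_DATA)
bridge_score = score("i am walking towards the bridge", model)
grief_score = score("my friend died by suicide a while back", model)
print(bridge_score > 0, grief_score > 0)
```

In this toy setup, “bridge” and “the bridge” pull the first message toward Code Orange even though it contains no keyword from the original list, while “suicide” pulls the second message away from it because, in the toy data, it only appears in messages about loss.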

AI exceeded human gut feel. Armed with the evolved design, we can now distinguish imminent risk texters from non-imminent risk texters with a remarkable accuracy of 90.2%. Tested on a sample set of over 45,000 new conversations, the new design resulted in a 52% decrease in false alarms and a 13% decrease in miss rate compared with Code Orange V1, ultimately reducing wait times for texters at imminent risk.
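As a quick illustration of how figures like these are typically computed, the Python sketch below derives accuracy, false alarm rate, and miss rate from confusion-matrix counts, then the relative decrease between two systems. The definitions used (false alarms as a share of non-imminent conversations, misses as a share of imminent ones) are assumptions, and the counts are made up for the example; they are not our evaluation data.

```python
def rates(tp, fp, tn, fn):
    """Compute summary rates from confusion-matrix counts."""
    total = tp + fp + tn + fn
    return {
        "accuracy": (tp + tn) / total,
        "false_alarm_rate": fp / (fp + tn),  # flagged, but not imminent risk
        "miss_rate": fn / (fn + tp),         # imminent risk, but not flagged
    }

def relative_decrease(old, new):
    """Fractional drop from old to new, e.g. 0.5 means a 50% decrease."""
    return (old - new) / old

# Made-up counts for two hypothetical systems on 1,000 conversations each.
baseline = rates(tp=60, fp=200, tn=700, fn=40)
candidate = rates(tp=70, fp=100, tn=800, fn=30)

false_alarm_drop = relative_decrease(baseline["false_alarm_rate"],
                                     candidate["false_alarm_rate"])
miss_drop = relative_decrease(baseline["miss_rate"], candidate["miss_rate"])
print(candidate["accuracy"], false_alarm_drop, miss_drop)
```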

Examples where the machine learning solution did better than Code Orange V1 (paraphrased for texter privacy):

In identifying previously missed imminent risk conversations:

- “Help me. I’m so depressed and just want to take my life.”

- “I feel awful. I feel like just driving off the bridge.”

- “Oh my god I just took a whole bottle of Ibuprofen and I already regret it.”

In reducing false alarms:

- “I’m in love with a girl who wouldn’t even care if i died.”

- “My friend died by suicide a while back and I need someone to talk to about it.”

- “I lost a friend to suicide two months ago and I don’t think I’ll ever get over it.”

But we’re not complacent! We operate with a start-up mentality: “a fast moving rocket ship,” as Founder and CEO Nancy Lublin likes to say. We aren’t afraid of experimenting. We observed significant improvements with the new model, but we will not stop here. We are truly excited about our ongoing research in these emerging fields, exploring things like word embeddings and deep learning. Stay tuned!