Detecting Crisis: An AI Solution

Content warning: This post references words and phrases associated with suicide, in the context of how Crisis Text Line identifies texters at most imminent risk.

Editor’s Note: In July 2017, The Cool Calm presented a post on how Crisis Text Line was using machine learning to triage texters by severity. This post follows up on the evolution of that product.

It’s mid-evening on December 1, 2017. A post goes viral on Instagram, resulting in record texter volume at Crisis Text Line. Hundreds of volunteer Crisis Counselors pour into the system to respond. An opening message from one texter reads (paraphrased for confidentiality):

“I just took an overdose of Lithium and I’m letting it build up in my system for a few days.”

The triaging algorithm flags this message as high-risk for a suicide attempt, and moves it straight to the top of the queue. A Crisis Counselor responds within 20 seconds. Within an hour, the texter has been located by emergency services, and is safe. This is the power of data at scale. Here’s how we did it:

AI comes to the rescue. This is where 65 million messages come in handy! Our machine learning layer identifies 86% of people at severe imminent risk for suicide in their first conversations. The model, an ensemble of deep neural networks, learned to predict risk of suicide from all conversations tagged with “Suicide” by Crisis Counselors in a post-conversation survey. The interplay between model predictions and the real-time feedback loop from Crisis Counselors is instrumental in retraining the model. We’re now positioned to serve 94% of high-risk texters in under 5 minutes.
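For readers who want a feel for the mechanics, here is a minimal sketch of the two ideas above: combining several models into one risk score, and letting that score decide who is answered first. It is an illustration under stated assumptions, not our production system. The names `ensemble_risk`, `TriageQueue`, and the toy scorers are hypothetical, the scorers are trivial stand-ins for trained neural networks, and simple averaging is assumed as the way ensemble members are combined.

```python
import heapq
import itertools
from typing import Callable, List, Sequence, Tuple

# A scorer maps message text to a risk score in [0, 1].
# In a real system each scorer would be a trained model; here they are toys.
Scorer = Callable[[str], float]


def ensemble_risk(message: str, scorers: Sequence[Scorer]) -> float:
    """Combine several scorers by unweighted averaging (an assumption)."""
    if not scorers:
        raise ValueError("need at least one scorer")
    return sum(scorer(message) for scorer in scorers) / len(scorers)


class TriageQueue:
    """Queue that always hands out the highest-risk waiting message first."""

    def __init__(self, scorers: Sequence[Scorer]) -> None:
        self._scorers = list(scorers)
        self._heap: List[Tuple[float, int, str]] = []
        # Arrival counter: breaks ties so equal-risk messages stay first-in, first-out.
        self._arrivals = itertools.count()

    def add(self, message: str) -> float:
        """Score an incoming message, enqueue it, and return its risk score."""
        risk = ensemble_risk(message, self._scorers)
        # heapq is a min-heap, so store the negated risk to pop the highest first.
        heapq.heappush(self._heap, (-risk, next(self._arrivals), message))
        return risk

    def next_message(self) -> Tuple[str, float]:
        """Remove and return (message, risk) for the highest-risk message."""
        neg_risk, _, message = heapq.heappop(self._heap)
        return message, -neg_risk

    def __len__(self) -> int:
        return len(self._heap)


# Hypothetical stand-in scorers keyed on neutral placeholder words.
def toy_scorer_a(text: str) -> float:
    return 0.9 if "urgent" in text.lower() else 0.1


def toy_scorer_b(text: str) -> float:
    return 0.7 if "now" in text.lower() else 0.2


if __name__ == "__main__":
    queue = TriageQueue([toy_scorer_a, toy_scorer_b])
    for text in ["hello, just checking in",
                 "this is urgent, I need help now",
                 "can we talk later"]:
        queue.add(text)
    # The second message arrived later but is handed out first.
    while len(queue):
        print(queue.next_message())
```

The negated score plus an arrival counter is a common way to get "highest risk first, otherwise first come, first served" out of a standard min-heap.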

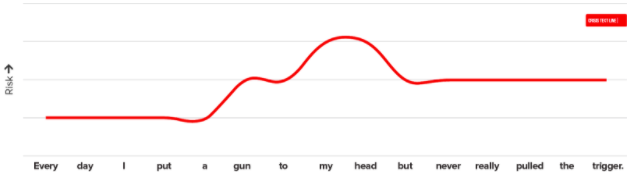

The true value of the model comes from its ability to predict risk by reading variability in sentences and understanding context. See how it reads this sentence as an example:

The model also identified thousands of high-risk words and word combinations. In fact, there are many words more indicative of high-risk than the word suicide alone:

What’s coming next? We see three major areas of impact as we continue to develop the model:

- Improvement in wait times. As the accuracy of the algorithm increases, high-risk texters will be served even more quickly, especially during the late-night, high-volume period (12am – 6am). We anticipate serving ~72,000 additional high-risk texters in under 5 minutes per year.

- Improvement in detection. Currently, our machine learning reads only the opening messages. An improved model will be able to detect and adjust risk during the course of a conversation. We expect machine learning to detect suicide risk 5-10 minutes faster than a human Crisis Counselor can.

- International expansion. As we expand internationally and into other languages, how do we ensure the model is tuned to react to culturally-specific words and phrases? Stay tuned for updates!